Camera phones have come a long way very quickly. Developments in sensor technology coupled with the use of AI algorithms allow smartphone cameras to produce images with more accurate colours, reduced noise, and more fine detail.

At the heart of this technology is a process called ‘pixel binning’. Even technically astute photographers might find themselves asking, “What is pixel binning?” It’s a relatively new concept in sensor technology and is worth knowing about before upgrading to your next smartphone.

What is pixel binning?

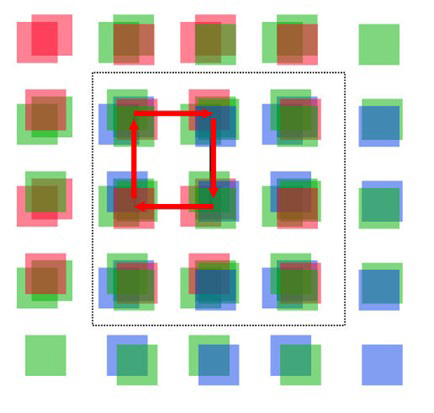

Pixel binning is a process by which the sensor groups – or ‘bins’ – adjacent pixels together to make one larger pixel. This reduces the amount of noise. The most common array, for example, is 2×2 binning (some smartphone manufacturers employ 3×3 and 4×4 binning), which combines four pixels into one. In this array, the sensor is effectively reducing its pixel count by three quarters. This also reduces image resolution and the amount of information the sensor can record.
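To make the idea concrete, here is a minimal sketch of 2×2 binning on a toy 4×4 grid of brightness values. It assumes a plain monochrome readout and simply sums each block; the function name `bin_pixels` and the sample values are illustrative only, and real sensors perform this step in hardware or in the image pipeline.

```python
def bin_pixels(image, factor=2):
    """Sum each factor x factor block of a 2D grid into one output value."""
    rows, cols = len(image), len(image[0])
    if rows % factor or cols % factor:
        raise ValueError("image dimensions must be divisible by the bin factor")
    binned = []
    for r in range(0, rows, factor):
        out_row = []
        for c in range(0, cols, factor):
            # Add up every value inside the current block.
            block_sum = sum(
                image[r + dr][c + dc]
                for dr in range(factor)
                for dc in range(factor)
            )
            out_row.append(block_sum)
        binned.append(out_row)
    return binned


# A toy 4x4 "sensor" readout (arbitrary brightness values).
sensor = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]

binned = bin_pixels(sensor, factor=2)
print(binned)  # 16 values in, 4 values out
```

Sixteen values go in and four come out, which is the three-quarters reduction in pixel count described above.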

Why would you want your sensor to do this? Because each binned pixel pools the light gathered by several smaller ones, ‘binned’ images have lower levels of noise, which is ideal when using a smartphone’s small sensor in low-light conditions.

In short, pixel binning is a process by which smartphone manufacturers can increase image quality without increasing the size of the sensor. To answer the question of ‘what is pixel binning’ more thoroughly, it helps to understand the nature of image sensor size and design.

Understanding sensor size

It may sound like common knowledge, but too often we forget how much a camera’s sensor size affects image quality. Of two cameras with the same pixel count, the one with the physically larger sensor will produce higher quality images. This is because the individual pixels on a larger sensor are larger than those on a physically smaller sensor. For example, the individual pixels on a full-frame sensor will be larger than those on a 1-inch type sensor.

It helps to think of your sensor’s pixels as light buckets – the larger the bucket, the more light it can collect, and thus more detail it can record from a scene.

When a pixel collects light, the sensor converts it into a digital image signal. Therefore, the more light a pixel can collect, the stronger the image signal it can produce.

Because smartphones are slimline by design, they have to accommodate very small sensors. These sensors have small pixels. Smaller pixels that capture less light produce images with high levels of noise and poor colour rendition, particularly in low light. Therefore, manufacturers use pixel binning to artificially increase the size of each pixel, allowing the sensor to record more light.
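The ‘bigger bucket’ effect is simple geometry, and a rough sketch shows the scale of it. The 1.0µm pixel pitch used here is an assumed, illustrative figure rather than the specification of any particular phone, and `binned_pixel` is a hypothetical helper.

```python
def binned_pixel(pitch_um, factor):
    """Return (effective pitch in micrometres, light-gathering area gain)
    for factor x factor binning of square pixels."""
    effective_pitch = pitch_um * factor
    area_gain = factor ** 2  # area scales with the square of the pitch
    return effective_pitch, area_gain


# Assumed example: 1.0 micrometre pixels binned 2x2.
pitch, gain = binned_pixel(1.0, 2)
print(f"Effective pitch: {pitch} um, area gain: {gain}x")
```

Under that assumption, a 2×2 bin behaves like a pixel with twice the pitch and four times the light-collecting area.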

With pixel binning, how many megapixels does my smartphone camera actually have?

Good question. Because pixel binning means merging together a group of smaller pixels into one larger pixel, you effectively reduce the pixel count. Manufacturers of phones that support pixel binning aren’t always clear about this when explaining their phone’s pixel count. This is because consumers correlate a higher pixel count with better images, without realising that sometimes fewer, bigger pixels are better.

For example, the iPhone 14 Pro supports pixel binning. On paper, the iPhone 14 Pro’s 48-megapixel camera is the highest resolution camera ever in an iPhone. However, when it groups its pixels in a 2×2 array, as we talked about above, it’s cutting its pixel count to a quarter. Therefore, its camera is ultimately able to produce a 12-megapixel image with pixel binning.
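The arithmetic behind the 48-megapixel example can be sketched in a few lines. The function names are illustrative, and the calculation assumes a square binning array (2×2, 3×3 and so on).

```python
def binned_megapixels(native_mp, factor):
    """Output resolution after factor x factor binning."""
    return native_mp / (factor * factor)


def fraction_removed(factor):
    """Share of the native pixel count given up by binning."""
    return 1 - 1 / (factor * factor)


output_mp = binned_megapixels(48, 2)
removed = fraction_removed(2)
print(f"{output_mp:g}MP output, {removed:.0%} of the pixel count given up")
```

With a 2×2 array, three quarters of the native pixel count is traded away, leaving a quarter of it in the final image.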

Ideally, a smartphone will have the option to turn pixel binning on and off because, when using it in ideal light conditions, you’re not using your sensor to its full potential with pixel binning enabled. Thankfully, most smartphones now allow you to turn pixel binning off and on. But the default setting is usually to have it on. So make sure you switch it off before taking what you hope will be a high-resolution image.

Why don’t manufacturers just make fewer pixels?

You might be asking yourself, what is pixel binning good for? Why don’t smartphone manufacturers just make sensors with fewer, but larger, pixels? It’s a very good question! There are two prime reasons.

First, it’s nice to have the option to shoot at a higher resolution if the conditions call for it. Say you’re hiking through Yosemite on a bright summer’s day and have a terrific view of El Capitan in all its glory. Wouldn’t you want to capture a rich, detailed image that uses the full potential of your sensor? Pixel binning off. Yet, if you stay in that spot until sunset to capture the cliff bathed in low light, you might want to shoot an image at a lower resolution that produces less noise: pixel binning on.

And secondly, pixel counts matter to consumers. Most people upgrading their phone will be more drawn to Samsung’s headline-grabbing 200MP sensor than to a longer description of the better signal-to-noise ratio that can be achieved when shooting pixel-binned images at a fraction of that resolution. It’s something like a political soundbite: punchy and effective, but specious.

What about the Bayer filter in pixel binning?

Pixel binning may be new to the mainstream, but astronomers and astrophotographers have been using it for decades. When digital imaging emerged in the early 2000s, photographers used monochrome astro-imaging cameras that had pixel-binning options. They would combine images shot through red, green, and blue filters that each covered every pixel, making it straightforward to produce the combined image.

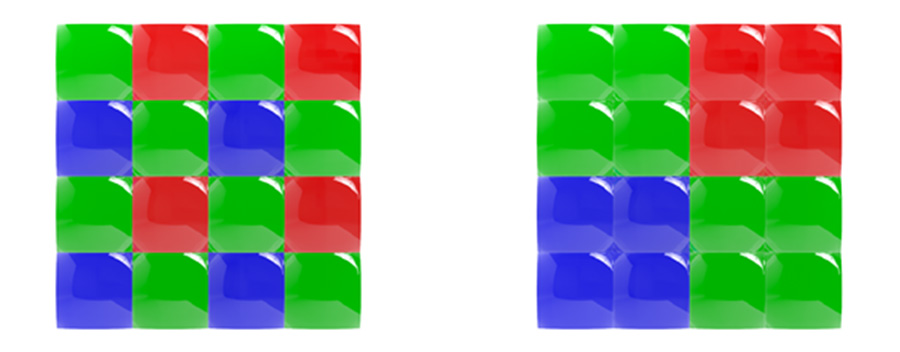

Modern digital cameras, however, use a more complex setup. They are also monochrome cameras but use colour filter arrays to produce images with the accurate tones we’ve come to expect. Until recently, nearly every camera used what is called the Bayer filter array – a sort of checkerboard pattern for arranging RGB colour filters on a 2×2 grid of pixels.

This grid consists of two green filters sat diagonally from each other, and red and blue filters over the other two pixels. Normally, a camera working with a Bayer filter array will compute the sum of all this colour data into one value, but with pixel binning this doesn’t work. Manufacturers needed a way to bin each colour individually.

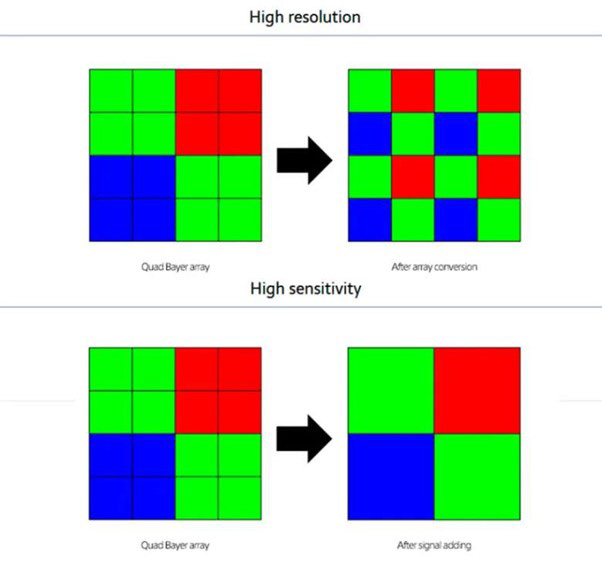

To this end, manufacturers designed what’s called the quad-Bayer array where each 2×2 grouping of pixels is assigned a single colour. Four of these are then grouped together akin to the original 2x green, 1x blue, 1x red Bayer filter array. The diagram below from Sony’s Semiconductor unit illustrates how this works.

The quad-Bayer array (which Apple is referring to when it uses the term ‘quad-pixels’) not only allows smartphone manufacturers to preserve colour data in the pixel binning process but has also enabled them to introduce other innovative features such as HDR Photo mode.
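The relationship between the two layouts can be sketched in code. This assumes an RGGB ordering for the basic Bayer tile (real sensors may start on a different corner), and all the function names are illustrative. The point it demonstrates is that every 2×2 bin in a quad-Bayer layout sits under a single colour, so binning leaves an ordinary Bayer mosaic behind.

```python
# Assumed RGGB Bayer tile: two greens on one diagonal, red and blue on the other.
BAYER = [["R", "G"],
         ["G", "B"]]


def bayer_colour(row, col):
    """Filter colour over a pixel in a standard Bayer array."""
    return BAYER[row % 2][col % 2]


def quad_bayer_colour(row, col):
    """Filter colour in a quad-Bayer array: each Bayer cell covers 2x2 pixels."""
    return BAYER[(row // 2) % 2][(col // 2) % 2]


def pattern(colour_at, size):
    """Render a size x size patch of a filter layout as strings."""
    return ["".join(colour_at(r, c) for c in range(size)) for r in range(size)]


def bin_quad_bayer(size):
    """Colour of each binned pixel after 2x2 binning a quad-Bayer patch."""
    binned = []
    for r in range(0, size, 2):
        row = ""
        for c in range(0, size, 2):
            colours = {quad_bayer_colour(r + dr, c + dc)
                       for dr in (0, 1) for dc in (0, 1)}
            if len(colours) != 1:
                raise ValueError("bin mixes colours")
            row += colours.pop()
        binned.append(row)
    return binned


quad = pattern(quad_bayer_colour, 4)
for line in quad:
    print(line)
print(bin_quad_bayer(4))
```

The 4×4 quad-Bayer patch bins down to the same 2×2 tile that a conventional Bayer sensor uses, which is why colour data survives the binning step.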

When to use pixel binning

Pixel binning is most useful when shooting in low-light conditions. This is when you’ll encounter the most noise. In practice, most of us don’t often need to shoot at the highest resolution with a smartphone.

On overcast days or even sunny days with lots of contrast, you might find that shooting with a lower resolution but bigger pixels helps you produce better images with a fuller range of tones.

It’s also worth noting that pixel binning does not support raw capture. So, if you’re looking to shoot raw files for greater flexibility in post-production, your smartphone camera can only output raw files that haven’t been pixel binned.

Which phones use pixel binning?

Each year, manufacturers across the board implement pixel binning in more and more smartphones. As a general rule, a quick way to tell if a device offers pixel binning is if its camera has a really high pixel count. The 200-megapixel Samsung Galaxy S23 Ultra and 108-megapixel Samsung Galaxy S21 Ultra, for instance, use pixel binning, as do the main 50MP sensor on the Google Pixel 7 Pro (50MP binned to 12.5MP) and its telephoto camera (48MP binned to 12MP). The recently announced Google Pixel 8 and Pixel 8 Pro also use it on their 50MP main camera sensor (50MP binned to 12.5MP).

And it’s not just rear cameras. Many manufacturers now offer pixel binning in the front selfie cameras, as well. Which makes sense when you think about it. You’re most likely going to be taking a selfie in one of two places – on holiday or out at night with friends. In those latter low-light conditions, a pixel-binned image will be a lot clearer and have better colours.

What are the common pixel binning arrays?

The 4-in-1 (2×2) array is most common. You’ll often see this with 48MP cameras binned to 12MP, or 64MP to 16MP. But some brands, like Samsung, use a 9-in-1 array, binning a 108MP camera to produce 12MP images.

As already mentioned, Samsung also has a 200-megapixel sensor that uses 16-cell pixel binning to produce a 12.5MP image. You’ll find 200MP sensors in different phones, but not all of them use the same size sensor, so the results can vary from phone to phone.
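If you only know a camera’s headline resolution and the size of the images it outputs by default, you can work backwards to the array it is likely using. The sketch below assumes square arrays and uses the figures quoted in this article; `binning_array` is an illustrative name, not part of any camera software.

```python
import math


def binning_array(native_mp, output_mp):
    """Infer the n-in-1 binning array from native and binned resolution."""
    pixels_per_bin = round(native_mp / output_mp)
    factor = math.isqrt(pixels_per_bin)
    if factor * factor != pixels_per_bin:
        raise ValueError("resolutions do not match a square binning array")
    return f"{pixels_per_bin}-in-1 ({factor}×{factor})"


# Native and binned resolutions mentioned in the article.
examples = [(48, 12), (64, 16), (108, 12), (200, 12.5)]
arrays = [binning_array(native, output) for native, output in examples]
for (native, output), array in zip(examples, arrays):
    print(f"{native}MP -> {output}MP: {array}")
```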

FAQs

Below we’ve answered the most common questions people have about pixel binning.

What is the advantage of pixel binning?

The benefit of pixel binning is you can combine a 2×2 pixel array of 4 pixels into one larger pixel. This produces cleaner images with lower noise in low-light conditions.

What is the disadvantage of pixel binning?

The negative aspect of pixel binning is that you have a lower resolution image. So while there is less noise in your image, there’s also likely less detail. The Sony A7S series opts for a 12MP sensor, as the quality of a pixel-binned sensor outputting 4K isn’t as good as that of a native resolution sensor. However, you’d be hard pressed to tell.

Do DSLRs and mirrorless cameras use pixel binning?

Camera manufacturers could incorporate pixel binning technology into mirrorless cameras and DSLRs, but it hasn’t been done to date. The closest equivalent is the Pixel Shift High Resolution mode seen in some cameras. In some cases it doesn’t increase the pixel count of the image but increases the quality by combining multiple images. In other instances the pixel count is increased, but not by the multiple of the number of images captured and combined.

If fewer, but larger pixels are better, why do manufacturers make sensors with high pixel counts?

A 12-megapixel sensor, like that in the Sony A7S III or even the Nikon D300s of yesteryear, has proven perfectly capable, provided you only want to look at your images on a digital screen. The issue is printing. To print images larger than A4 or A3, you will require more resolution.

When 12-megapixel images are displayed on a smartphone screen, the resolution of those screens is often insufficient to show the entire image at full resolution. In print, however, an image from a 108MP camera such as Samsung’s can be reproduced that much larger. A 12-megapixel image at 4621 × 2596 pixels printed at 300ppi measures 39.12 × 21.98cm. A 108-megapixel image would be 13,865 × 7789 pixels and print at 117.39 × 65.95cm.
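Those print sizes come from dividing the pixel dimensions by the print resolution and converting inches to centimetres. A short sketch of the calculation, with `print_size_cm` as an illustrative helper name:

```python
CM_PER_INCH = 2.54


def print_size_cm(width_px, height_px, ppi=300):
    """Print dimensions in centimetres at a given pixels-per-inch setting."""
    width_cm = width_px / ppi * CM_PER_INCH
    height_cm = height_px / ppi * CM_PER_INCH
    return round(width_cm, 2), round(height_cm, 2)


size_12mp = print_size_cm(4621, 2596)
size_108mp = print_size_cm(13865, 7789)
print(size_12mp)   # (39.12, 21.98)
print(size_108mp)  # (117.39, 65.95)
```

Lowering the `ppi` value gives a larger print from the same file, at the cost of visible sharpness when viewed up close.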

There are also considerations when scaling. If you compare a print from an iPhone 11 Pro with one from an iPhone 14 Pro, the latter’s higher resolution captures far greater detail, and its image width almost doubles.

Higher resolution and a larger physical sensor give you more flexibility to make adjustments to your images in post-production. Also, the noise that comes from using high ISO settings is random. Combining the signal from four pixels to create one imaging pixel is likely to make a better-quality image. The sensors in smartphones are small, so the pixels are small. This makes high ISO noise more of an issue than it would be with a larger sensor.
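Why random noise shrinks when pixels are combined can be shown with a small simulation. This is a simplified sketch: it assumes each pixel sees the same true signal plus independent Gaussian noise, and it averages four pixels per bin. The signal level, noise level and trial count are arbitrary, and real sensor noise has several sources that behave less neatly.

```python
import random
import statistics


def simulate(pixels_per_bin, trials=20000, signal=100.0, noise_sigma=10.0, seed=1):
    """Compare noise in single-pixel readings with noise in binned readings."""
    rng = random.Random(seed)

    def read_pixel():
        # True signal plus independent random noise for each pixel.
        return signal + rng.gauss(0, noise_sigma)

    single = [read_pixel() for _ in range(trials)]
    binned = [
        sum(read_pixel() for _ in range(pixels_per_bin)) / pixels_per_bin
        for _ in range(trials)
    ]
    return statistics.stdev(single), statistics.stdev(binned)


single_noise, binned_noise = simulate(pixels_per_bin=4)
print(f"Single-pixel noise: {single_noise:.2f}")
print(f"2x2 binned noise:   {binned_noise:.2f}")
print(f"Improvement:        {single_noise / binned_noise:.2f}x")
```

Under these assumptions the noise falls by roughly the square root of the number of pixels combined, so a 2×2 bin comes out at about half the noise of a single pixel.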

Featured image credit: Steve Johnson via Unsplash.
