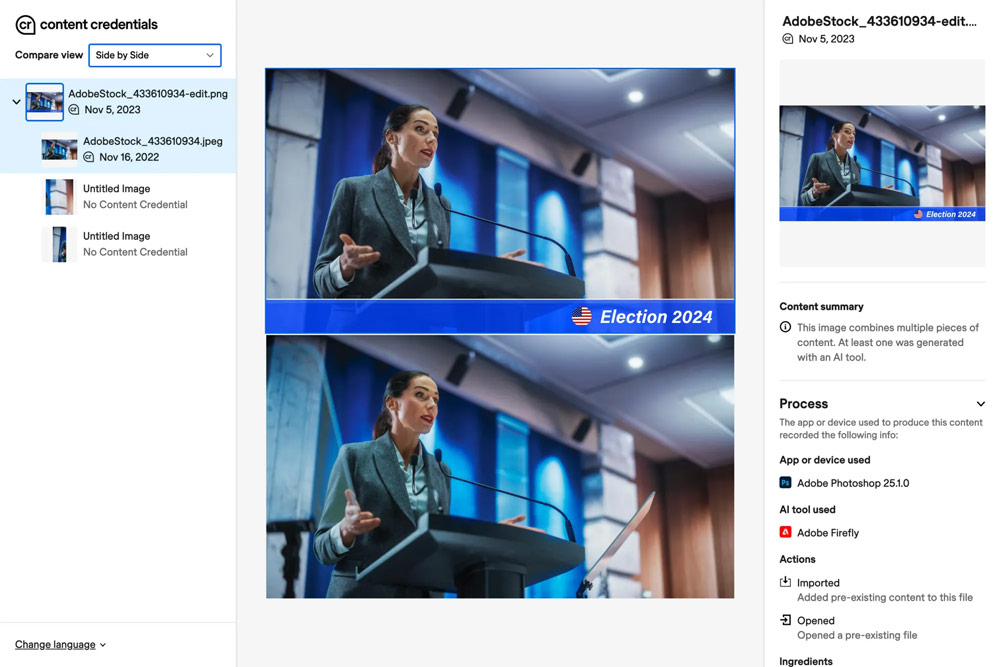

With the latest version of Adobe’s Firefly generative AI tools promising even more realistic image generation in Photoshop and other programs, the photo-editing giant has also announced more details of its content authenticity app and Chrome browser extension.

During our show report from Adobe MAX in the US last autumn, we revealed how the software giant is working to make it much easier to see how much AI was used in an image, if at all.

Adobe finds itself in a tricky position, as it’s under commercial pressure to keep on innovating with AI, but is also being accused of making photo-realistic AI images so good (and easy to generate) that they could destroy the livelihoods of a lot of photographers. Why pay a photographer to take a product photo, for example, when you can get your computer to do it by typing in a few prompts?

Don’t you train your AI engines on my pictures!

At the same time, there is widespread worry that generative AI tools might scoop up your photographs as training material when they are let loose (‘trained’) on the Internet. Adobe insists that it trains its generative AI tools only on commercial content it has permission to use, but the Internet rumour mills and conspiracy theorists are never quiet for long.

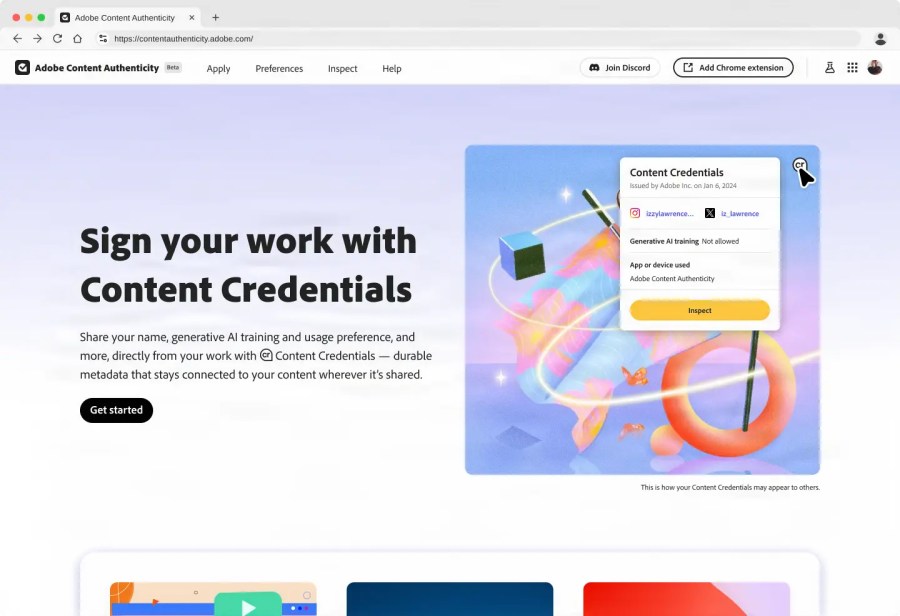

Against this background, we caught up with Adobe’s Andy Parsons at the recent Adobe MAX London event to get an update on what the company is doing to make the ‘content credentials’ of an image easier to see, and on how photographers can start using the new Chrome browser extension right away to make sure their pictures are clearly labelled and used only in the ways they intend.

Get the latest on Content Credentials and using the new Chrome browser extension
