When the influential French painter Paul Delaroche roved his eye across a Daguerreotype around 1840, he bluntly declared: ‘From today, painting is dead.’ Painting survived, and photography found its role as a new way to create and see. Since its inception, photography has faced myriad changes and challenges from technological developments. More recently these have come from multimedia, videography, digital photography, the omnipotent internet, Photoshop and smartphones, among others.

The latest disruptive technology to flip the photography world upside down and turn it inside out is artificial intelligence (AI). And there’s already a lot of AI in photography: DSLRs, mirrorless cameras and mobile devices use it for facial recognition, autofocus and tracking.

The rise of AI in photography

The Olympus OM-D E-M1X was one of the first cameras to feature deep learning technology to identify subjects and track them more efficiently. There’s AI in photo-editing software too: frowns can be turned upside down using Neural Filters in Photoshop, and Skylum Luminar allows a new sky to fall into place in just a few clicks. There’s AI in Lightroom’s selection tools. Additionally, Narrative Select’s image culling software allows you to quickly identify the worst images from a shoot.

The Olympus OM-D E-M1X was one of the first cameras to feature deep learning technology to identify subjects and track them more efficiently. Photo credit: Andy Westlake.

The AI that seems to be rattling the industry most is image generation. A variety of machine learning models have been developed that generate digital images from natural language descriptions, or prompts. These can be anything, and I mean anything. For example: ‘A painting of a fox sitting in a field at sunrise in the style of Claude Monet.’ ‘A Pikachu fine dining with a view towards the Eiffel Tower.’

In January 2021, OpenAI introduced DALL·E. A year later came DALL·E 2, which generates more realistic and accurate images at four times the resolution. DALL·E 2 has learned the relationship between images and the text used to describe them. It uses a process called diffusion: it starts with a pattern of random dots and gradually alters that pattern towards an image as it recognises specific aspects of that image.
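For readers curious about what ‘gradually alters that pattern’ means in practice, here is a deliberately simplified Python sketch of the reverse (denoising) loop. It is a toy, not how DALL·E 2 actually works: a real diffusion model uses a trained neural network, guided by the text prompt, to predict what the clean image should look like at each step. Here that prediction is replaced by a hypothetical ‘perfect denoiser’ that already knows the target, and the function name and noise schedule are illustrative assumptions.

```python
import random


def toy_reverse_diffusion(target, steps=50, seed=0):
    """Toy illustration of iterative denoising; NOT a real diffusion model.

    `target` stands in for what a trained, text-conditioned network would
    predict as the clean image. Here we assume a perfect (hypothetical) predictor.
    """
    rng = random.Random(seed)
    # Start from pure Gaussian noise: "a pattern of random dots".
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for t in range(steps, 0, -1):
        predicted_clean = target  # hypothetical perfect denoiser
        # Move a fraction 1/t of the remaining way towards the prediction;
        # at the final step (t == 1) this lands on it, up to rounding.
        x = [xi + (pi - xi) / t for xi, pi in zip(x, predicted_clean)]
        # Re-inject a shrinking amount of noise (none on the last step).
        if t > 1:
            sigma = 0.1 * (t - 1) / steps
            x = [xi + rng.gauss(0.0, sigma) for xi in x]
    return x


# A 16-pixel "image": alternating black (0.0) and white (1.0) values.
image = [0.0, 1.0] * 8
result = toy_reverse_diffusion(image)
```

Starting from pure noise, each pass nudges the dots a little closer to the prediction and sprinkles back a smaller dose of randomness, so the picture emerges gradually rather than all at once.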

Some industries and practitioners are harnessing what AI has to offer. Jason Allen won first prize in the Digital Arts / Digitally-Manipulated Photography category at the Colorado State Fair for his work Théâtre D’opéra Spatial, created using the text-to-image AI generator Midjourney.

Getty Images has banned AI-generated imagery outright. A message sent to all its contributors reads: ‘Effective immediately, Getty Images will cease to accept all submissions created using AI generative models (e.g., Stable Diffusion, Dall-E 2, MidJourney, etc.) and prior submissions utilizing such models will be removed.’

‘There are open questions with respect to the copyright of outputs from these models. There are also unaddressed rights issues with respect to the underlying imagery and metadata used to train these models. These changes do not prevent the submission of 3D renders and do not impact the use of digital editing tools (e.g., Photoshop, Illustrator, etc.) with respect to modifying and creating imagery.’

Adding a starry sky using Skylum Luminar Neo, turning the image monochrome and tweaking the exposure has done a reasonably convincing job of turning this daylight image into a moonlit one. Image credit: Angela Nicholson.

Is technology killing photography?

I asked newspaper and magazine picture editors whether there had been any memo on dealing with AI-generated images. One, at the Telegraph magazine, replied: ‘I have not had any instruction/directive regarding the use of AI images, sorry to say. Obviously, we do on occasion use renders to help illustrate pieces (think architectural visualisations) but I imagine you’re alluding to AI passing off as “real”…? Can’t really imagine a situation where that would happen with the Telegraph magazine and without a very clear reason and labelling/captioning it’s not something I’d countenance.’

It was a similar story at The Times: ‘Not yet, but am sure it’s going to be an issue at some point, perhaps more of a worry for the illustrator world, but the more it develops the more real it is and frightening.’

Many I spoke to in the industry are frightened. Is photography dead or dying (again)? Will the technology advance to a point where you just have to type a description of what photograph you require in what style to produce it? Is the rise of the machines imminent and should I be investing in memory alloy rather than memory cards?

I need some extra intelligence, so I speed-dial David Mellor, who has a background in Sociology and Philosophy, has studied Cyber Security and Computer Science, and is currently undertaking a research project at Coventry University on AI and Smart Cities.

‘In terms of what AI is, the main thing is to dispel the magic around it and to say it’s not these things that people often think it is, the very sci-fi idea of robots in either servile or slave positions. It’s also not the dystopian notion that “things” are going to come and there’s going to be Skynet or The Terminator. It’s tremendously more mundane,’ explains Mellor, to my slight disappointment.

‘One of the problems with AI often is that people get what’s easy and what’s difficult mixed up. People will often say that Garry Kasparov was beaten by a computer in 1997 playing chess or Lee Sedol was beaten at Go by a computer.’

‘It seems to us that they were actually products of human ingenuity and creativity, but you can reduce those kinds of tasks to quite interesting and relatively straightforward mathematical modelling that a computer can do much faster than a human brain can do. That seems like a hard problem but it’s a relatively easy one to solve. What’s harder is getting a robot hand to come in and pick up the chess pieces and put them in place. That would be difficult,’ he adds.

Image generation

I crack my fingers, exhale and type in my first text prompt: ‘Amateur Photographer magazine editor Nigel Atherton wearing a tutu dancing on a desk in a field.’ After 15 seconds, a selection of four images appears. There is no desk. Image credit: Peter Dench.

Suitably concerned and intrigued by image generation, I sit down to give it a try, opting for the free version of Stable Diffusion, launched by the British company Stability AI. The whole of the English language is at my disposal and my brain is in overdrive. The moment seems monumental: am I adding to a problem? Will I be able to crawl back from the void?

I crack my fingers, exhale and type in my first text prompt: ‘Amateur Photographer magazine editor Nigel Atherton wearing a tutu dancing on a desk in a field.’ After 15 seconds, a selection of four images appears. There is no desk. Repeating the prompt gives a new set of images of a distorted Nigel in arabesque.

The football team I support is currently without a manager, and so I tried to summon our previous and most successful one: ‘Eddie Howe football manager tracksuit pitch side happy, sport.’ The result looks like a Bo’ Selecta version of chef Gordon Ramsay. Image credit: Peter Dench.

Undeterred, I continue. The football team I support is currently without a manager, so I try to summon our previous and most successful one: ‘Eddie Howe football manager tracksuit pitch side happy, sport.’ The result looks like a Bo’ Selecta version of chef Gordon Ramsay. Having recently buried our monarch, I prompt: ‘Queen Elizabeth II wearing a crown riding an angel through the gates of heaven.’

Having recently buried our monarch, I prompt: ‘Queen Elizabeth II wearing a crown riding an angel through the gates of heaven.’ Image credit: Peter Dench.

Then I try to recreate some of my own better-known photographs: ‘Peter Dench photographer couple kissing man sick Epsom Derby red bus.’ My career doesn’t feel threatened. These generators clearly don’t handle basic anatomy very well: you often get six or seven fingers on a hand, three legs or two noses.

‘The question we need to ask about these generated images is, is there something different here happening to what’s happened before? I think there possibly is but it’s not something to do with the magic of AI, it’s something to do with the scale and the reach and impact of what these images can do. We already have a saturated image economy, particularly on social media and people are already struggling to understand the difference between what is or isn’t something that actually happened.’

‘I suppose it could change our perceptions of what is or isn’t part of the historical record. In terms of artistic photography, creating artistic pieces, people should be open about how they created those works,’ suggests Mellor.

DreamStudio is Stability AI’s official interface and API for Stable Diffusion. It’s faster and has a fancy dashboard, and you pay a fee to cover the computing cost of each image.

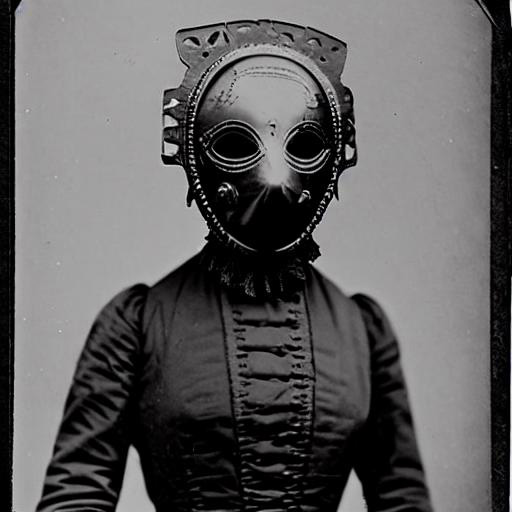

Friend and novelist Sean Thomas spent a few happy hours using DreamStudio for a piece he wrote in the Spectator. ‘Instead of strict instructions, I mentally gave it a target: make something scary. Something truly shocking. I am a thriller writer, after all, and I love horror movies. I also decided to focus on creating photographs, because for me they have a natural creepiness. Consequently, I decided to create some weird new ‘old photographs’. And immediately, I started getting better results.’

‘By this time, I’d realised that the words you use in your prompt are super important. So important that a new word – promptcraft – has been coined to describe the skill. Mustering my best promptcraft I went through a load of provocative words, and eventually alighted on ‘albino’ – and I put it in my prompts. Something in this word – its sad associations with fear, loneliness, illness – sent the machine into overdrive. Now it was churning out properly horrible images.’

After less than an hour of my own exploration into image generation, I’m frustrated and bored. I can see the potential, but ethically it’s questionable. Stable Diffusion was trained on images scraped from the web, many of them copyrighted, and it generates pictures by drawing on aspects of other people’s work and recombining them. It’s not straight-up plagiarism, but it feels uncomfortable and certainly doesn’t feel like photography.

Mellor is on point. ‘One of the interesting philosophical questions about it is that it takes away the eventfulness of photography. When we think about photography you often think about it in terms of someone with a camera who was in some place at some time and they recorded something happening, even if it’s a stillness, even if it’s nothing, there’s a kind of an event of a photograph whereas what these do is they have ‘eventless’ photography.’

‘There never was a time when this was created other than someone like me who has absolutely zero photographic skill, typing in text prompts and pumping out an image.’

Latent Bloom

‘All my work is really about conspiracies, which I view, in a strange way, as the reinterpretation of reality into the narrative which is what photography is,’ explains photographer and educator Jack Latham. Conspiracies were very much at our door during the pandemic, with the whole world at home on the internet. The way that we researched and communicated was being filtered through algorithms and suggestions.

Instead of concentrating on a single conspiracy for his project and book, Latent Bloom (Here Press 2020), Latham wanted to focus on how conspiracies spread across the internet. During lockdown, he bought flowers from his local florist themed for love, celebration, sympathy and so on. He then photographed them and fed the images into an algorithm, which used unsupervised deep learning to identify and reimagine details from those images and generate something new.

‘I thought not only are flowers important as part of art history, they are also something that are cultivated, grown and sold and different flowers mean different things. I’ve got this program which is constantly running and it generates an infinite amount of pictures and each one is unique. I tweaked the coding slightly.’

It’s fascinating to watch something that can’t see or comprehend what a flower is analyse hundreds of photographs, try to understand what it’s looking at, and generate an image it thinks you want to see. In Latham’s flipbook and video, flowers slowly morph, adapt, shift and change. The results are beautiful and often biologically flawed: a blur of colour, or a flower blooming twice on one stem.

‘I became quite disenfranchised with the way in which photography is sometimes spoken about, it’s very cut and paste. There doesn’t seem to be a lot of new ideas in photography. To further demonstrate that, I fed the top hundred most famous photographic texts, including Walter Benjamin, John Berger and Susan Sontag, into the same algorithm and it generated essays about photography, each one of those is unique. Sometimes it makes perfect sense and they’re actually quite reasonable, at other times they go on crazy tangents.’

To generate the book’s text, Latham used GPT-2 (an unsupervised, transformer-based deep learning language model created by OpenAI in 2019 for the single purpose of predicting the next word(s) in a sentence). GPT-2 was also used to create the text in Jonas Bendiksen’s The Book of Veles (GOST 2021), a book about misinformation in the contemporary media landscape that hoodwinked the photography industry.
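GPT-2’s core task, guessing the next word from what came before, can be illustrated with something far cruder. The Python sketch below is a bigram model that simply counts which word tends to follow which. It is a stand-in under stated assumptions, not GPT-2’s transformer, which weighs the whole preceding passage rather than just the last word; the function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which word follows which: a far simpler stand-in for GPT-2."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen.

    Ties go to the follower encountered first (Counter.most_common ordering).
    """
    options = follows.get(word.lower())  # .get does not create new entries
    if not options:
        return None
    return options.most_common(1)[0][0]


def generate(follows, start, length=8):
    """Greedily chain next-word predictions into a short run of text."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)
```

Trained on the phrase ‘a photograph is a record a photograph is a fiction’, `generate(model, "a", 4)` returns ‘a photograph is a photograph’: each step is locally plausible, but the whole goes nowhere, which is the gap that far larger models like GPT-2 narrow.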

Drawing the line

Fakery and fiction versus truth and fact have always been hotly debated in photography, and the debate has come a long way since cousins Elsie Wright and Frances Griffiths planted cardboard fairies in a Cottingley garden. When has a photograph ever been authentic? Manipulation has moved from the chemical processes of doctoring, dodging, burning and cropping to digital ones. AI has just made fakery easier.

‘The parameters of what we deem tampered with and not tampered with are being pushed further and further. The idea of representing truth but also presenting beauty is a battle most photographers grapple with. A truth is something that 100 people can look at and interpret in their own way and photographs are identical in that sense. It’s what the photographer has chosen to present in what context it is and what manipulations they’ve done to get it to that point before you see it. The idea that it’s kind of arbitrary or realism is patently false,’ says Latham.

‘It becomes quite a boring solution in a way: we would need to use something like blockchain technology, or something that creates certification, to show the provenance of certain images. Where did an image come from? Are we looking at something genuine?’ suggests Mellor.
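The certification Mellor mentions rests on a simple idea: record a cryptographic fingerprint of each version of an image, and link each record to the one before so that rewriting history becomes detectable. The Python sketch below shows that hash-chain principle using SHA-256 from the standard library. It is an illustration under assumptions, not a blockchain (there is no distributed ledger) and not an implementation of any real provenance standard; the record fields and function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record):
    """SHA-256 of a record serialised with sorted keys, so it is reproducible."""
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode("utf-8")).hexdigest()


def add_entry(chain, image_bytes, note):
    """Append an entry that fingerprints this image state and links to the last entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    record = {
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "note": note,
        "prev_hash": prev_hash,
    }
    chain.append({**record, "hash": _digest(record)})
    return chain


def verify(chain):
    """Recompute every link; editing any earlier entry breaks the chain."""
    prev_hash = GENESIS
    for entry in chain:
        record = {k: entry[k] for k in ("image_sha256", "note", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(record):
            return False
        prev_hash = entry["hash"]
    return True
```

Changing any earlier entry, say relabelling a ‘captured’ step as ‘generated’, alters its hash, and `verify` returns `False`. A real system would also need trusted signatures so that the first entry itself can be believed.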

Amazon.com Inc uses machines to box up customer orders, automating a job previously done by thousands of its workers. Online retailer Ocado has developed a robotic system capable of packing bags with all the care and dexterity of a human.

Microsoft’s decision to replace human journalists with robots backfired after the tech company’s artificial intelligence software illustrated a news story about racism with a photo of the wrong mixed-race member of the band Little Mix.

‘There are some areas of photography that could clearly be ‘under threat’. Say product photos or marketing materials. If you have a company which has the ability to put text prompts into an AI image generator and pump out the less bizarre, normal-type images, you might find that companies will cut employment by trying to save money by doing this at a desktop rather than getting someone in to actually take the photographs,’ predicts Mellor.

AI has been around since the 1950s and is likely to be around a century on. It will destroy jobs and create livelihoods. It will succeed and fail. There will be benefits and drawbacks. AI in photography will develop and photographers will respond and adapt.

Can it make you a better photographer, or is it the end of ‘real’ photography? AI is just good programming: another tool for photographers to use to capture something more, while they still make the ultimate choice about where to point the camera. For now.

Article: Peter Dench
